The Digital Twin Paradox: Data Can Remember – But Physics Knows

The concept of the digital twin has matured. What began as a passive mirror of physical systems has evolved into a strategic, intelligent asset, capable of sensemaking, foresight, and context-driven adaptation. This article explores how digital twins have advanced through successive generations, why physics-based modelling is now essential, and how hybrid approaches like Physics-Informed Neural Networks offer the key to navigating unpredictable, high-risk scenarios—the so-called Black Swan events.

Evolution of the Digital Twin: From Data to Understanding

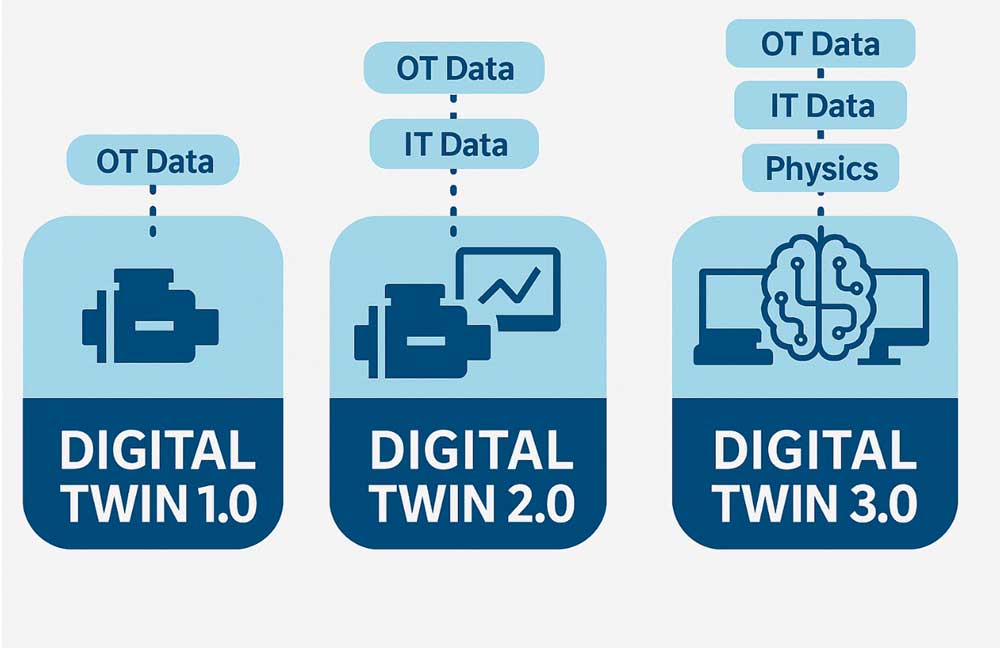

Digital Twin 1.0 emerged from the world of operational technology (OT), characterized by real-time data acquisition and system visualization. These early twins mirrored reality without interpretation. They offered data, but not meaning; measurements, but not insights. Their role was reactive, not proactive. In a way, Digital Twin 1.0 was like a digital photograph—faithful, detailed, and ultimately flat. There was no depth, no sense of consequence, no capacity to engage with time. The twin showed what was, but had nothing to say about what could be.

Digital Twin 2.0 integrated IT and OT systems, expanding the scope to include enterprise data, ontologies, and structured coordination. It provided visibility across operational and managerial layers, letting stakeholders ask, “What can I see and manage in my data?” While it improved situational awareness, it still could not predict outcomes or guide actions. It was more like an instrument panel than a mirror: a dashboard that contextualized what had happened but remained tethered to retrospective logic.

Then came Digital Twin 3.0, and with it, a deeper awareness of limitations. This phase exposed a growing tension between data science and the reality of industrial systems, as operations and maintenance professionals ran up against the shortcomings of purely statistical or black-box machine learning models. Algorithms might detect patterns, but they could not explain them. A prediction without understanding is like a prophecy: possibly correct, but fundamentally unusable.

In this phase, digital twins began to resemble the portrait of Dorian Gray: an image evolving in parallel with the physical object, revealing degradation and change, but leaving us uncertain as to what was driving the transformation. Beyond reflection or replication, we needed reasoning. There was a clear need for digital twins to become trustworthy decision aids—not just dashboards or mirrors. That need laid the groundwork for a new wave of hybrid approaches, in which machine learning was enhanced with physics-based understanding. This shift was not only technical, but also cultural: engineers demanded interpretability, transparency, and causal reasoning, arguing, "Without physics, we guess. With physics, we project."

Data Aren’t Enough

The limitations of purely data-driven methods in industrial contexts are well-documented. Traditional machine learning often fails to generalize to unseen conditions or rare events. It performs well when past patterns are stable, frequent, and well-represented. But the real world rarely behaves so cooperatively. In many cases, these models are trained on narrow slices of history—bounded, biased, and blind to what lies outside them.

When datasets are noisy, incomplete, or suffer from selection bias, models become fragile. Perhaps most critically, they produce results that are difficult for domain experts to interpret. This lack of transparency isn’t merely inconvenient—it can be dangerous. In safety-critical environments like energy, transportation, or manufacturing, trust is not optional. If the model can’t explain itself, engineers won’t act on it.

As systems grow more complex and interdependent, organizations are confronted with a paradox: they have more data than ever before, yet are increasingly unable to convert those data into meaningful decisions. Retrospective analytics focus on past correlations and cannot account for emergent behaviours, cascading faults, or nonlinear dynamics. They can tell us what happened, but not why—or what’s about to happen next.

Even advanced deep learning architectures, powerful as they may be, remain prisoners of their data. They extrapolate patterns; they do not infer causality. They can classify failures but rarely understand failure mechanisms. As a result, they fall short in helping us manage uncertainty, assess risk, or build resilient systems.

Without physics, data are directionless. They show movement but not motive, change but not consequence. What is needed is a new class of models—ones that can reason, generalize, and anticipate. Only by embedding domain knowledge, physical laws, and contextual understanding into our models can we move from surface-level prediction to strategic foresight.

Black Swan Events and the Limits of Prediction

Black Swan events—rare, high-impact failures that escape conventional forecasting—pose one of the greatest challenges to modern predictive systems. These events may be triggered by subtle system degradation, unexpected interactions between components, or sudden environmental changes. What makes them especially dangerous is their invisibility in historical datasets: they lie outside the statistical envelope of what has previously occurred.

The core issue is that traditional machine learning is retrospective. It learns only from what it has seen. If a critical failure mode has never been captured in data or has occurred so infrequently that it leaves no meaningful statistical signature, the system remains blind to it. This is the paradox of the Black Swan: the more catastrophic the event, the less likely it is to be represented in our records. Absence of data becomes a dangerous illusion of safety.

In complex, tightly coupled industrial systems, this blind spot is a systemic risk. These systems often operate across wide ranges of physical conditions and are subject to wear, aging, and environmental variation. Over time, they can drift into failure modes that were never present in commissioning or early operation. Machine learning, dependent on narrow training distributions, cannot extrapolate meaningfully into these outlier states.

To address this, we must introduce the laws of physics as a structural layer in our predictive architecture. Physics doesn’t require observation to assert truth—it governs even in the absence of data. By incorporating physical principles such as conservation laws, thermodynamics, structural dynamics, or fluid mechanics into our models, we can give them a broader frame of reference. These principles can become a scaffolding for uncertainty, constraining predictions to remain plausible even when data are incomplete, noisy, or unprecedented.
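As a minimal sketch of what "constraining predictions to remain plausible" can look like in practice, the Python snippet below screens a data-driven prediction against the first and second laws of thermodynamics for a steady-state heat exchanger. The class, function, and field names, the example operating point, and the 10% tolerance for losses are illustrative assumptions, not part of any particular twin platform.

```python
from dataclasses import dataclass


@dataclass
class HeatExchangerState:
    """Steady-state measurements for a two-stream heat exchanger (hypothetical names)."""
    m_hot: float      # hot-side mass flow, kg/s
    m_cold: float     # cold-side mass flow, kg/s
    cp_hot: float     # hot-side specific heat, J/(kg K)
    cp_cold: float    # cold-side specific heat, J/(kg K)
    t_hot_in: float   # hot-side inlet temperature, degC
    t_hot_out: float  # hot-side outlet temperature, degC
    t_cold_in: float  # cold-side inlet temperature, degC


def check_cold_outlet(state, t_cold_out_pred, rel_tol=0.10):
    """Return a list of physics violations for a predicted cold-outlet temperature."""
    violations = []
    # Second law: the cold stream cannot leave hotter than the hot stream enters.
    if t_cold_out_pred > state.t_hot_in:
        violations.append("cold outlet above hot inlet")
    # The cold stream is being heated, so it cannot leave colder than it entered.
    if t_cold_out_pred < state.t_cold_in:
        violations.append("cold outlet below cold inlet")
    # First law: heat released by the hot side must match heat absorbed
    # by the cold side, up to an assumed relative tolerance for losses.
    q_hot = state.m_hot * state.cp_hot * (state.t_hot_in - state.t_hot_out)
    q_cold = state.m_cold * state.cp_cold * (t_cold_out_pred - state.t_cold_in)
    if abs(q_hot - q_cold) > rel_tol * abs(q_hot):
        violations.append("energy balance residual too large")
    return violations


# Illustrative operating point (assumed values).
state = HeatExchangerState(m_hot=2.0, m_cold=3.0, cp_hot=4180.0, cp_cold=4180.0,
                           t_hot_in=90.0, t_hot_out=60.0, t_cold_in=20.0)
plausible = check_cold_outlet(state, 40.0)    # consistent with the energy balance
implausible = check_cold_outlet(state, 95.0)  # e.g. a black-box model's outlier
```

A check of this kind does not make the underlying model smarter, but it gives the twin a way to reject or flag outputs that no physical system could produce, whether or not similar conditions ever appeared in the training data.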

The integration of physics is not just about increasing accuracy; it is also about building resilience into the logic of prediction. With physical knowledge embedded into them, systems can run simulations of hypothetical conditions, stress-test critical functions, and explore how anomalies might evolve—long before those paths are evident in sensor data.

By moving beyond statistical mimicry and embedding an understanding of how systems behave, we can start to detect the early tremors of Black Swan events. Only then can predictive systems evolve from pattern matchers into risk sentinels.

Articulating Physics through Synthetic Data and Simulation

In the industrial world, the scarcity of failure data isn't just an inconvenience—it’s a fundamental obstacle. Critical failures, while rare and undesirable, are exactly the scenarios predictive models need to understand. But when they do happen, the conditions leading up to them are often chaotic, undocumented, or too hazardous to safely replicate. This creates a structural blind spot: the moments we most need to predict are the ones we least understand.

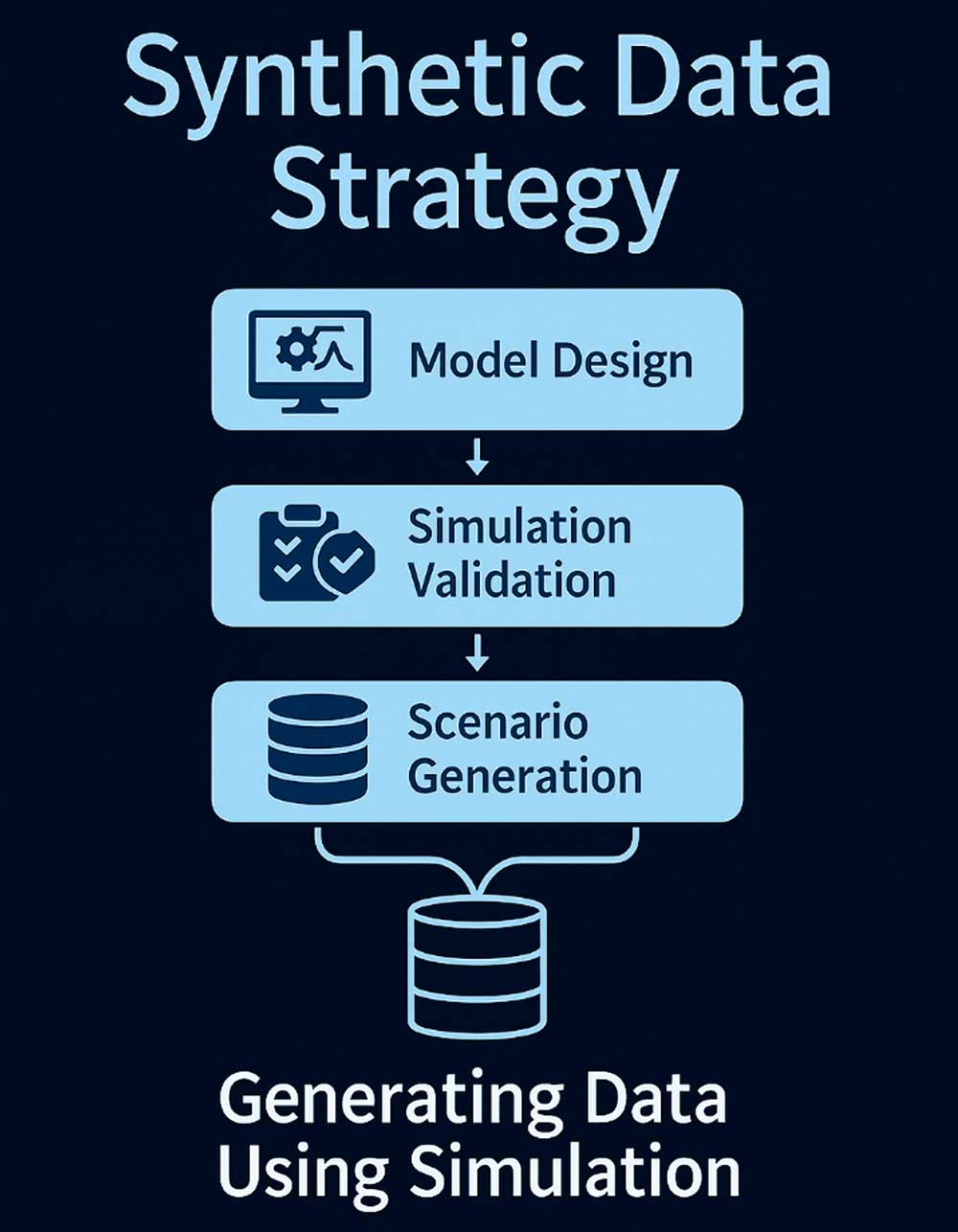

To overcome this, industries are turning to virtual prototyping and physics-based simulation as a new foundation for intelligent modelling. Modelling languages such as Modelica, together with methods like finite element modelling (FEM) and multi-body simulation, allow engineers to recreate both normal and failure-prone behaviours of systems in controlled digital environments. These simulations are not only safe; they are also highly configurable, enabling us to observe how a component responds under stress, fatigue, corrosion, overload, or even misuse.

The result is a new class of training data: synthetic, scenario-rich, and physically grounded. We can simulate how a rolling bearing degrades under variable loads, how thermal stresses propagate in a turbine, or how a gearbox responds to lubrication loss. Every simulation becomes an experiment—an opportunity to generate labelled datasets that fill the gaps in historical operation.

Techniques such as fault injection, stress testing, and parametric sweeping create data far beyond the reach of real-world experimentation. Because these simulations are based on first-principle physics, the resulting data both reflect possible system behaviours and reinforce the laws governing them.
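The idea of combining a parametric sweep with fault injection can be sketched in a few lines of Python. The example below is a deliberately simple stand-in for a real simulation tool: it integrates a mass-spring-damper, injects a sudden stiffness loss halfway through the run, and labels each run for later training. The model, parameter values, function names, and the zero-crossing feature are all assumptions chosen for illustration.

```python
def simulate_oscillator(stiffness, damping=0.5, mass=1.0, fault_time=None,
                        fault_factor=1.0, dt=0.001, duration=10.0):
    """Free response of m*x'' + c*x' + k*x = 0, starting from x=1, v=0.

    If fault_time is given, the stiffness is multiplied by fault_factor
    from that moment on (a crude model of sudden structural weakening).
    Integration uses semi-implicit Euler with a fixed step dt.
    """
    x, v = 1.0, 0.0
    trace = []
    for i in range(round(duration / dt)):
        t = i * dt
        k = stiffness
        if fault_time is not None and t >= fault_time:
            k = stiffness * fault_factor          # injected fault
        a = -(damping * v + k * x) / mass
        v += a * dt
        x += v * dt
        trace.append(x)
    return trace


def zero_crossings(trace):
    """Count sign changes; a rough proxy for oscillation frequency."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a * b < 0)


# Parametric sweep over nominal stiffness, crossed with fault severity.
dataset = []
for stiffness in (50.0, 100.0, 200.0):
    for fault_factor in (1.0, 0.7, 0.4):          # 1.0 means no fault
        trace = simulate_oscillator(stiffness, fault_time=5.0,
                                    fault_factor=fault_factor)
        dataset.append({
            "stiffness": stiffness,
            "fault_factor": fault_factor,
            "zero_crossings": zero_crossings(trace),
            "label": "healthy" if fault_factor == 1.0 else "faulty",
        })
```

Each row is a labelled, physically generated example of a condition that may never have been recorded in the field. A production workflow would replace the toy oscillator with a validated simulation model, but the pattern of sweeping parameters, injecting faults, and labelling outcomes stays the same.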

Digital twins built on this foundation stop being passive reflectors of yesterday’s data. Instead, they become experimental testbeds, able to project future scenarios, evaluate resilience strategies, and validate potential interventions without touching the factory floor. In this way, synthetic data become not a compromise, but a catalyst for more robust, resilient, and explainable predictive models.

Physics-Informed Neural Networks: From Data to Understanding

Synthetic data provide rich behavioural patterns, but it’s the learning method that determines how much value we can extract from them. Traditional neural networks, even when trained on large datasets, remain limited by their lack of interpretability and by the absence of any guarantee that they respect physical constraints.

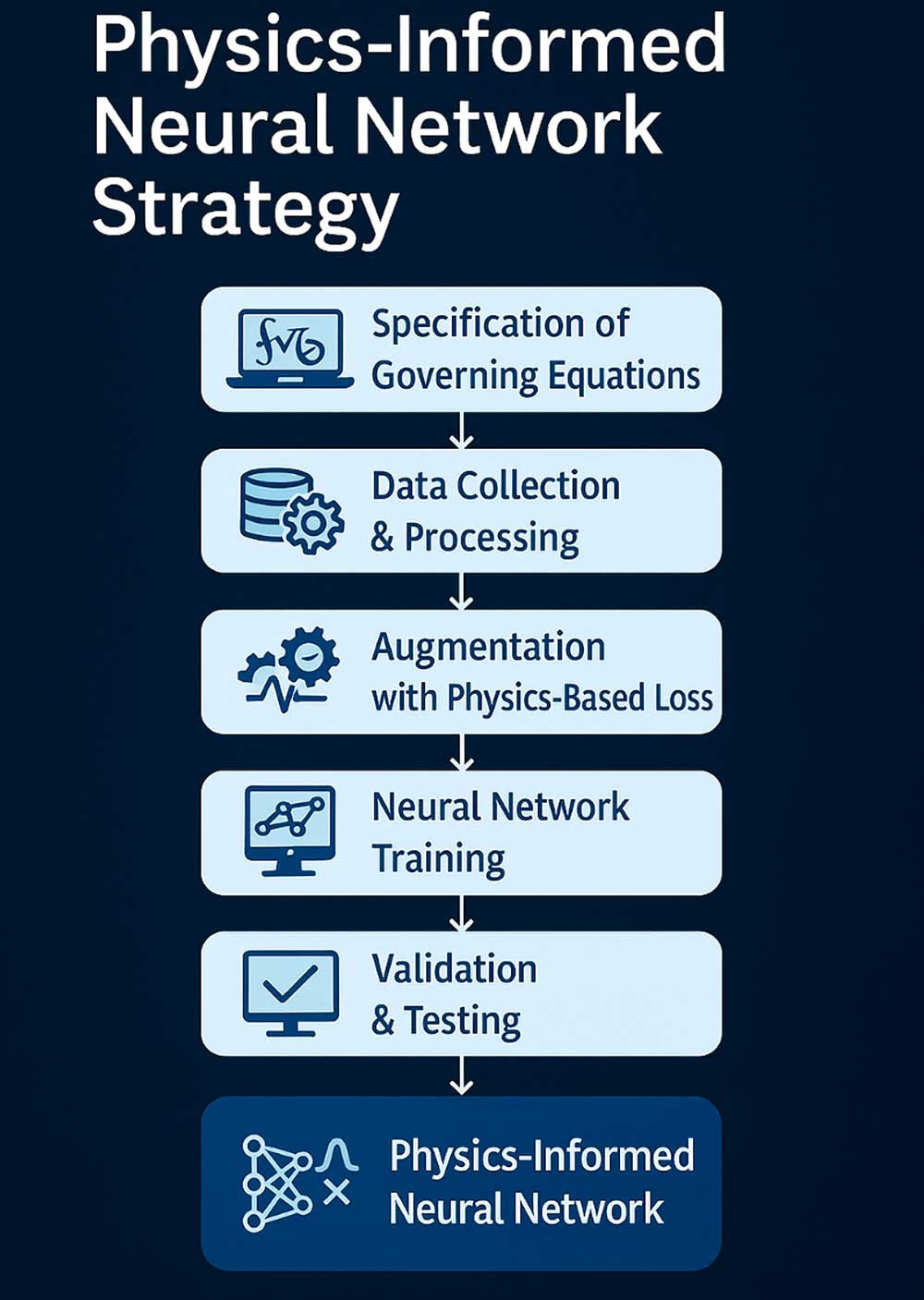

Physics-Informed Neural Networks (PINNs) change how machine learning models interact with knowledge. Unlike standard networks that learn correlations from data alone, PINNs encode known physical laws, such as partial differential equations, conservation of mass and energy, or thermodynamic bounds, into the training process. These equations shape the loss functions, enforce behavioural constraints, and tie what the network learns to physical meaning.
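One common way to write down such a loss, given here as a generic sketch rather than a formulation taken from any specific system, combines a data-misfit term with a penalty on the residual of the governing equation. The symbols are assumptions introduced for illustration: $u_\theta$ is the network with parameters $\theta$, $(x_i, t_i, u_i)$ are the $N_d$ measured samples, $(x_j, t_j)$ are $N_r$ collocation points where no measurement is needed, $\mathcal{N}[\cdot]$ is the differential operator of the physical law written so that $\mathcal{N}[u] = 0$ for a true solution, and $\lambda$ weights physics against data.

$$
\mathcal{L}(\theta) = \frac{1}{N_d}\sum_{i=1}^{N_d}\bigl|u_\theta(x_i, t_i) - u_i\bigr|^2 \;+\; \lambda\,\frac{1}{N_r}\sum_{j=1}^{N_r}\bigl|\mathcal{N}[u_\theta](x_j, t_j)\bigr|^2
$$

The second term can be evaluated anywhere in the operating envelope, including regions where no sensor data exist, which is what lets the physics constrain the model beyond its measurements.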

This fusion creates models that are not only data-aware but also physics-consistent. PINNs can infer system behaviour in unmeasured conditions, extrapolate to unseen scenarios, and remain faithful to the physical truths engineers depend on. This makes them particularly valuable in data-scarce domains, where historical measurements are insufficient or unreliable.
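The effect of a physics term on extrapolation can be illustrated with a toy problem. The sketch below is not a neural network: to stay dependency-free it swaps in a small polynomial as the trainable model, but the loss has the same two parts as a PINN loss, namely data misfit plus the residual of a governing equation at collocation points. The physical law (Newton's law of cooling), all constants, and all function names are assumptions made for the example.

```python
import math

# Assumed cooling rate (1/h), ambient temperature and initial temperature (degC).
K, T_ENV, T0 = 1.0, 20.0, 80.0


def true_temperature(t):
    """Analytic solution of dT/dt = -K (T - T_ENV); used for data and scoring only."""
    return T_ENV + (T0 - T_ENV) * math.exp(-K * t)


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit(degree, t_data, y_data, t_colloc=(), lam=0.0):
    """Least-squares polynomial fit of u(t); physics rows penalise u' + K u - K T_ENV."""
    rows, rhs = [], []
    for t, y in zip(t_data, y_data):              # data misfit: u(t) = y
        rows.append([t ** j for j in range(degree + 1)])
        rhs.append(y)
    w = math.sqrt(lam)
    for t in t_colloc:                            # physics: u'(t) + K u(t) = K T_ENV
        rows.append([w * ((j * t ** (j - 1) if j else 0.0) + K * t ** j)
                     for j in range(degree + 1)])
        rhs.append(w * K * T_ENV)
    n = degree + 1
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * y for r, y in zip(rows, rhs)) for i in range(n)]
    return solve(ata, atb)                        # normal equations


def predict(coeffs, t):
    return sum(c * t ** j for j, c in enumerate(coeffs))


# Measurements exist only for the first half hour...
t_data = [0.0, 0.25, 0.5]
y_data = [true_temperature(t) for t in t_data]
# ...but the physics can be enforced over the full two hours.
t_colloc = [0.1 * i for i in range(21)]

data_only = fit(2, t_data, y_data)
physics_informed = fit(4, t_data, y_data, t_colloc, lam=1.0)

t_test = 2.0                                      # far outside the measured range
err_data = abs(predict(data_only, t_test) - true_temperature(t_test))
err_phys = abs(predict(physics_informed, t_test) - true_temperature(t_test))
```

In this toy setting the data-only fit matches its three measurements exactly yet drifts far from the true curve once it leaves them, while the physics-informed fit stays close because the cooling law holds it in place where no data exist. Real PINNs replace the polynomial with a neural network and the linear solve with gradient-based training, but the division of labour between data and physics is the same.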

In the context of digital twins, PINNs act as intelligent intermediaries between simulation and reality. They use synthetic data not just to train, but also to refine and validate system models in real time. Their predictions come with physical justifications, enabling engineers to see the twin not as a black box, but as a knowledgeable collaborator.

PINNs enable faster simulations, more accurate anomaly detection, and predictive capabilities that are both interpretable and grounded. They allow us to pose “what-if” questions, simulate failure paths, and anticipate how systems might evolve, not only statistically, but also structurally. For instance, a PINN model trained on turbine dynamics can predict the onset of blade fatigue long before vibration sensors detect anomalies.

Ultimately, PINNs elevate digital twins from descriptive to prescriptive intelligence. They do not just signal change—they explain it. They do not just see risk—they understand it. And in doing so, they lay the groundwork for a new generation of industrial decision-making, one that fuses data science with engineering judgment in practical and profound ways.

Conclusion: From Echoes to Insight

The evolution of digital twins is not just technological—it is conceptual. Instead of acting as mirrors of the physical world, twins are becoming intelligent agents that combine data, physics, and simulation into decision-ready insight.

Hybrid approaches, especially those using PINNs, represent the frontier of this transformation. They allow us to embed knowledge into our machines, not just feed them numbers. They empower us to detect the swan song of an asset before silence falls.

Most importantly, they offer a path to true contextual intelligence, turning overwhelming complexity into meaningful, actionable understanding. As Europe pushes for digital sovereignty and resilient infrastructure, the time to embed explainable, physics-informed intelligence into our systems is now.

Text: Diego Galar

Photos: iStock, Shutterstock

Images: Diego Galar
