Using the Power of Data to Increase Profitability

Most papermakers have an ever-increasing amount of information available to them for problem-solving, planning and decision-making. The question in many mills has become, how do we sift through the data we have to find what we need to improve our operation and make us more profitable?

Data handling and storage is one of the fastest-developing technologies in the industry. Only 5 years ago our focus was on how to save megabytes; now we do not think twice about storing gigabytes or even terabytes.

The problem now is that collecting and storing data has little value if we do not put it to use. The more data we are confronted with, the more difficult it becomes to make this data work for us. Today’s papermakers use process data to gain a better understanding of their process. A multitude of information flows through most paper mills, making it time-consuming to analyze data and to find exactly what is needed. Mills are looking for easy-to-manage tools that will assess information and provide clear criteria for making sound decisions.

It is well recognized in the paper industry that any optimization of our processes is usually accompanied by collecting, storing and analyzing data. As a result, the automation landscape surrounding paper machines has become extensive. Less than 40 years ago an average paper machine was equipped with 10 or 20 local controllers, controlling for instance the main steam flow, headbox pressure and stock flow. Today’s paper machine is a highly sophisticated process that cannot be run without an extensive Distributed Control System (DCS). The DCS was initially considered helpful rather than a necessity. This is no longer true, and systems like the DCS, the Quality Control System (QCS) and the Web Inspection System (WIS) have become key to running the papermaking process. In most cases the process would grind to a halt without them, as they combine and control information to and from hundreds, or in some cases thousands, of instruments located in and around the paper machine. The sheer numbers make it impossible for today’s operator to manage the papermaking process without some form of computerized help.

The question may be why we have let it get so far that we can no longer make paper without the help of the computer. We often hear how papermakers of the past could make paper without the modern-day “digital buddy”. The truth most likely is that the professional of the past could indeed make paper without the modern-day assistant, but it would be of the same quality and at the same cost as many years ago. Past levels of quality and efficiency are not good enough to survive in today’s world. The reason we rely so much on our “digital buddy” is that we need to make a high-quality product in the most optimized way.

We have come a long way in the last 20 years, however there is still room for improvement. We can learn a huge amount on how to do this from our daily life.

Figure 1. Automation landscape surrounding paper machines.

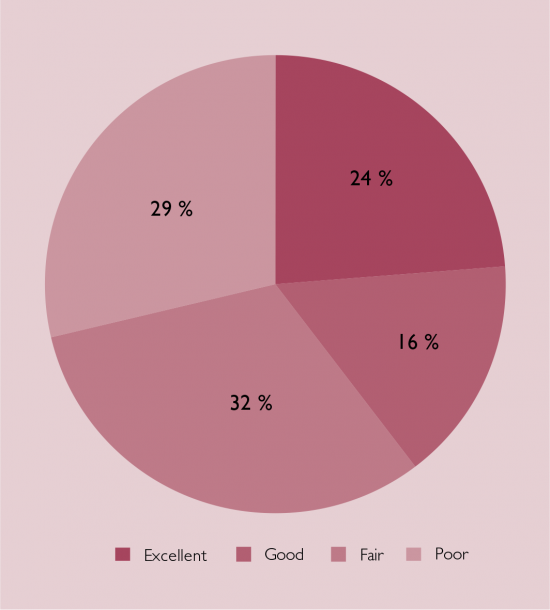

Figure 2. Average performance of controllers in DCS systems. Source: ABB analysis of multiple independent DCS systems in the P&P industry.

For example, today’s automobile combustion engine still operates on the same principles that Nicolaus Otto used in 1876, but in performance and efficiency the modern engine cannot be compared with Otto’s early designs. Until the 1970s, optimization of the combustion engine was mainly achieved through mechanical engineering improvements, but since the 1980s combustion engines have become more and more digitized. The engine is now equipped with sensors and electronic actuators, all controlled by the well-known engine management system. With the increasing focus on the environment and on cost optimization, the combustion engine would have been banned without this digital evolution. The modernization of the combustion engine is a true example of starting with measuring the process, analyzing the data and then using the results to optimize and improve, in many cases in real-time closed-loop control. The process of collecting and analyzing combustion engine data has led to efficiency improvements of over 100 % in day-to-day use.

If we compare the evolution of the combustion engine to the papermaking process, there are similarities. Most paper machines are equipped with automation systems such as Distributed Control Systems, Quality Control Systems, Web Inspection Systems, Web Monitoring Systems and modern drive systems. Thousands of gigabytes of information are collected from many data points, and an average of 500 control loops (or more in some cases) on a paper machine is today’s standard. We can conclude that the paper industry has this part well under control: we know where and how we want to equip the paper machine with measurements and controls. The question is, are we using this expertise to its full potential?

The answer to this question lies in the success of the systems: their extensive implementation and sheer size have outgrown our ability to oversee them. The vast number of input/output signals and associated controls makes it impossible to see the full picture. It is exactly the success of distributed control and its ease of implementation that has led to the excessive number of control loops and volume of information, making it impossible to optimize and monitor them all. The sheer number of control loops prevents us from analyzing and optimizing them at system implementation or initial machine startup, and in 9 out of 10 cases the loops are not revisited or optimized after implementation.

Careful analysis of a number of running paper machines (figure 2), controlled by systems from different origins, shows the potential clearly: less than 50 % of the implemented loops in the analyzed systems perform in the categories good or excellent, indicating an improvement potential of 50 % or more.

As with Nicolaus Otto’s combustion engine, the actual process of making paper has not changed, but it has been optimized and fine-tuned in many areas, increasing its complexity and making it difficult to analyze without the help of data. To take the next step in making the paper process more efficient, it is important that we use the data we are collecting on a day-to-day basis to optimize and improve efficiency. To do this we could use data to:

- Analyze frequency of failures and defects

- Check and improve the health of the controls

- Check the quality of the end product

- Reduce indirect maintenance

Let’s explain the background of these in more detail.

Using Data to Determine Frequency of Failures and Defects

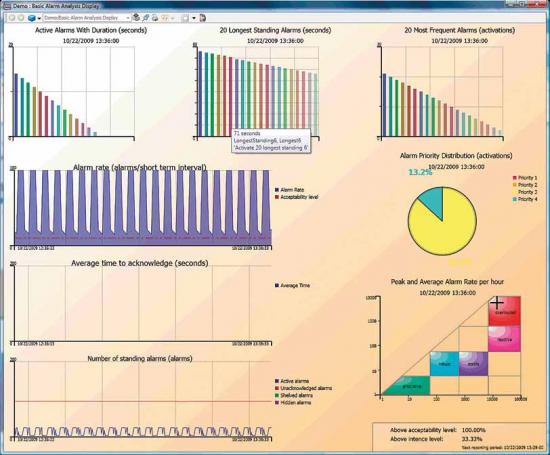

A standard function of control systems is reporting alarms. However, if we look at how we handle alarms, we can immediately see the improvement potential. Because of the large number of data points the systems manage, monitors commonly show a large number of alarms, and operators develop a blindness to them, missing the critical ones or not recognizing repeating alarms. This results in unwanted process upsets or, not uncommonly, failure situations that last for years. If we analyze alarm data we can address and remove dominant and unwanted situations, thus improving process efficiency. Would it not be highly beneficial if we could analyze, for instance (see figure 3):

- The number of Active, Unacknowledged, Hidden, and Shelved alarms

- The alarm rates, including the average and maximum number of alarms per hour

- The Average time to acknowledge alarms

- The Percentages of alarms above Acceptability level (user defined)

- The Percentages of alarms above Intense level (user defined)

- The Most frequent alarms

- The alarms that were active for the longest time

- The Still active alarms that were active for the longest time.

Based on a detailed analysis of alarms it is possible to address and remove long-term or recurring process upsets, allowing operators to focus on things that are of real importance.
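As an illustration, a few of the figures listed above can be derived from a plain alarm log. The sketch below is a minimal example and not the interface of any particular DCS: the `AlarmEvent` record, its field names and the alarm tags are assumptions made for this illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AlarmEvent:
    """Hypothetical alarm log record; field names are assumptions."""
    tag: str
    raised: datetime
    acknowledged: Optional[datetime] = None  # None: never acknowledged
    cleared: Optional[datetime] = None       # None: still active


def alarm_summary(events, period_hours, top_n=3):
    """Compute a few alarm statistics for one reporting period."""
    counts = Counter(e.tag for e in events)
    # Count alarms per clock hour to find the busiest hour.
    per_hour = Counter(
        e.raised.replace(minute=0, second=0, microsecond=0) for e in events
    )
    ack_seconds = [
        (e.acknowledged - e.raised).total_seconds()
        for e in events
        if e.acknowledged is not None
    ]
    still_active = sorted(
        (e for e in events if e.cleared is None), key=lambda e: e.raised
    )
    return {
        "average_per_hour": len(events) / period_hours,
        "max_per_hour": max(per_hour.values(), default=0),
        "average_ack_seconds": (
            sum(ack_seconds) / len(ack_seconds) if ack_seconds else None
        ),
        "unacknowledged": sum(1 for e in events if e.acknowledged is None),
        "most_frequent": counts.most_common(top_n),
        # Oldest still-active alarms first.
        "longest_still_active": [e.tag for e in still_active[:top_n]],
    }


# Made-up example data covering a two-hour period.
t0 = datetime(2024, 1, 1, 8, 0)
example_events = [
    AlarmEvent("FIC101_HI", t0, t0 + timedelta(seconds=30), t0 + timedelta(minutes=5)),
    AlarmEvent("FIC101_HI", t0 + timedelta(minutes=10),
               t0 + timedelta(minutes=10, seconds=90), t0 + timedelta(minutes=20)),
    AlarmEvent("LIC205_LO", t0 + timedelta(minutes=70)),
    AlarmEvent("FIC101_HI", t0 + timedelta(minutes=75),
               t0 + timedelta(minutes=76), t0 + timedelta(minutes=80)),
]
summary = alarm_summary(example_events, period_hours=2)
print(summary["most_frequent"])
```

In this made-up log one tag accounts for three of the four alarms, which is the kind of repeating alarm that is easily overlooked on a busy operator screen.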

Using Data to Check and Improve The Health of the Controls

The success of the automation system lies in the extended functionality packed into it, but this is also one of its biggest weaknesses. We cannot oversee, optimize and monitor all this functionality without adapting the way we do it. We try to optimize such a complex system in the same way we would a single-loop controller, working on the one loop that seems to be causing an upset and often forgetting that this loop is part of many integrated and dependent loops.

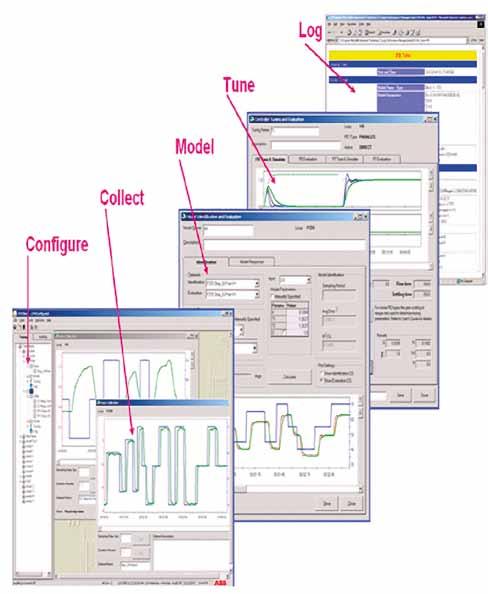

To oversee these complex systems of controls we need to let modern technology work for us. It is a small step from data collection, already implemented in DCS systems, to analyzing the performance of the controls. The control performance in its turn can then be translated into easy to understand results, indicating to us where we should focus our efforts.

Based on calculated performance indices for control loops and final control elements (control valves), we can then easily identify, for instance, the “Top Ten” worst-performing control loops or control elements. When groups of loops are performing poorly, a plantwide disturbance analysis can be carried out to look for the common root cause.

Loop Performance Analysis tools (figure 4) provide concrete guidelines on where to focus our efforts in optimizing the process, eliminating hidden disturbances and helping us to get to the root cause. It would not be the first time that a control loop is working hard to eliminate a problem introduced by another control loop.
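To make the idea of a “Top Ten” list concrete, the sketch below ranks loops by a deliberately simple index. The two indices used here (mean absolute control error as a percentage of span, and the share of time the controller output is saturated) are assumptions chosen for illustration; commercial loop performance tools use their own, more elaborate indices. The loop names and values are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LoopData:
    """Hypothetical container for logged data from one control loop."""
    name: str
    span: float               # engineering range of the measurement
    setpoint: List[float]
    measurement: List[float]
    output: List[float]       # controller output in percent


def mean_abs_error_pct(loop):
    """Mean absolute control error as a percentage of the measurement span."""
    errors = [abs(sp - pv) for sp, pv in zip(loop.setpoint, loop.measurement)]
    return 100.0 * sum(errors) / len(errors) / loop.span


def saturation_pct(loop, low=0.5, high=99.5):
    """Percentage of samples where the controller output sits at a limit."""
    saturated = sum(1 for u in loop.output if u <= low or u >= high)
    return 100.0 * saturated / len(loop.output)


def worst_loops(loops, n=10):
    """Return the n loops with the largest mean absolute error."""
    return sorted(loops, key=mean_abs_error_pct, reverse=True)[:n]


# Made-up example data for three loops.
example_loops = [
    LoopData("headbox_pressure", 100.0, [50.0] * 4,
             [50.0, 51.0, 49.0, 50.0], [40.0, 42.0, 38.0, 40.0]),
    LoopData("steam_flow", 20.0, [10.0] * 4,
             [12.0, 12.0, 12.0, 12.0], [100.0] * 4),
    LoopData("stock_flow", 200.0, [100.0] * 4,
             [100.0, 104.0, 96.0, 100.0], [55.0, 60.0, 50.0, 55.0]),
]
for loop in worst_loops(example_loops, n=2):
    print(loop.name, mean_abs_error_pct(loop), saturation_pct(loop))
```

In this example the steam flow loop shows both a persistent offset and a fully saturated output, a combination that suggests the cause lies outside the controller tuning itself, for example in the valve or upstream in the process.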

Figure 3. Example of analysed alarm data.

Using Data to Check the Quality of the End Product

Another source of process data that is not used to its full potential is the Variance Partition Analysis, also known as the VPA report. Generated by Quality Control Systems, these reports are mostly known as reel or grade reports. They show the 2-sigma values that paper mills commonly use to determine and report the quality of the paper being produced and whether it is within specification. However, the VPA also has significant, and seldom used, potential to improve the efficiency of the process and the quality of the end product.

VPA reports give an excellent summary of the product being produced and can be a great help when analyzing the improvement potential and where it can be found. The VPA in principle provides a snapshot of the produced product in 4 areas: short-term variation and long-term variation in machine direction, variation in cross direction, and the total sum of these. By comparing and benchmarking the data in these reports, a fast analysis can be made of the improvement potential of the process; this analysis also provides a first insight into the areas where the potential lies.
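The split into components can be sketched with a simplified two-way decomposition of scanner data, where each scan is one row of data box values across the sheet. This is an illustration under stated assumptions, not the exact calculation of any particular QCS: the variance of the scan averages is taken as the long-term machine-direction component, the variance of the averaged profile as the cross-direction component, and the remainder as a residual, which is commonly attributed to short-term machine-direction variation.

```python
from statistics import fmean, pvariance


def variance_partition(scans):
    """Split total variance into MD long-term, CD and residual parts.

    scans: list of scans, each a list with one value per data box.
    All scans must have the same number of data boxes.
    """
    n_boxes = len(scans[0])
    grand_mean = fmean(v for scan in scans for v in scan)
    scan_means = [fmean(scan) for scan in scans]
    box_means = [fmean(scan[j] for scan in scans) for j in range(n_boxes)]
    # What is left after removing the scan average and the average profile.
    residual = [
        scan[j] - scan_means[i] - box_means[j] + grand_mean
        for i, scan in enumerate(scans)
        for j in range(n_boxes)
    ]
    return {
        "md_long": pvariance(scan_means),
        "cd": pvariance(box_means),
        "residual": pvariance(residual),
        "total": pvariance([v for scan in scans for v in scan]),
    }


# Made-up basis weight readings: 3 scans of 3 data boxes each.
example_scans = [
    [80.0, 82.0, 81.0],
    [81.0, 83.0, 82.0],
    [79.0, 81.0, 83.0],
]
parts = variance_partition(example_scans)
for name, value in parts.items():
    # A 2-sigma value is twice the square root of the variance.
    print(name, round(value, 3), "2-sigma:", round(2 * value ** 0.5, 3))
```

With equally sized scans the three components add up to the total variance, so the relative size of each part indicates whether the improvement potential lies mainly in machine-direction control, in profile control, or in short-term stability.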

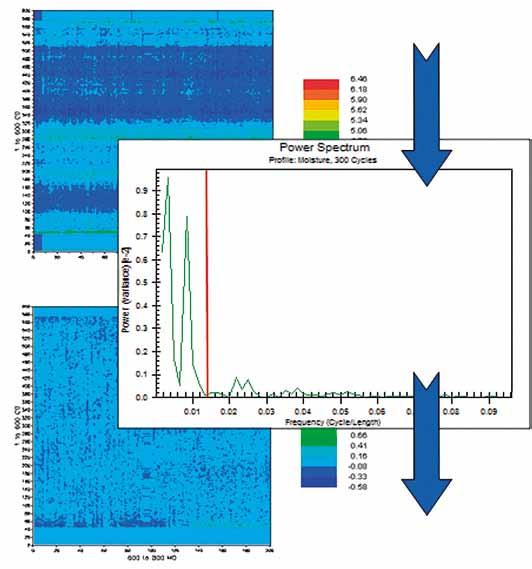

Based on the results of the VPA, a more in-depth investigation into the process can be initiated, and high-speed data can be captured from the online measurements. The collected data can then be analyzed in several ways, helping to determine the reason for process disturbances and to remove them. For instance, by determining the dominant frequencies in the data it is possible to compare these with the known frequencies of the process and find the source of the disturbance.
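The frequency comparison can be sketched as follows. This minimal example uses a plain discrete Fourier transform from the Python standard library to keep it self-contained; for real high-speed data an FFT library would normally be used instead. The sample rate, the test signal and the table of equipment frequencies are all made up for the illustration.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate_hz):
    """Return the frequency (Hz) of the strongest component in the signal."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    best_bin, best_magnitude = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(centered)
        )
        if abs(coeff) > best_magnitude:
            best_bin, best_magnitude = k, abs(coeff)
    return best_bin * sample_rate_hz / n


def closest_source(frequency_hz, known_sources):
    """Return the name of the known source nearest to the given frequency."""
    return min(known_sources, key=lambda name: abs(known_sources[name] - frequency_hz))


# Made-up signal: a strong 5 Hz component plus a weaker 12 Hz component,
# sampled at 100 Hz for 2 seconds.
SAMPLE_RATE_HZ = 100.0
signal = [
    math.sin(2 * math.pi * 5.0 * i / SAMPLE_RATE_HZ)
    + 0.3 * math.sin(2 * math.pi * 12.0 * i / SAMPLE_RATE_HZ)
    for i in range(200)
]

# Hypothetical rotation or pulsation frequencies of process equipment.
known_sources_hz = {"fan pump": 12.0, "pressure screen": 5.2, "couch roll": 2.1}

peak = dominant_frequency(signal, SAMPLE_RATE_HZ)
print(peak, closest_source(peak, known_sources_hz))
```

The frequency resolution equals the sample rate divided by the number of samples, so a longer recording is needed to separate sources whose frequencies lie close together.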

Figure 4. Loop Performance manager.

Another well-proven analysis is to use the high-frequency data to determine the quality and improvement potential of cross-machine control, also known as profile control (figure 5). Profile data analysis helps to determine whether there is room for improvement in different areas, for instance:

- Edge problems (deckle waves, edge flows)

- Global and local mapping issues

- Model mismatch

- Tuning issues (I versus PI)

- Influences from wire and felt cleaning

- Startup or sheet break recovery issues

- Grade dependent CD tuning.

Figure 5. Profile Capacity Analysis.

Using Data to Reduce Indirect Maintenance

Several global players have concluded that maintenance costs are the largest controllable expenditure in their plants and that in some cases these costs equal the annual net profit. If we look deeper into the background of these costs, it becomes even more apparent that there is room for improvement in this field. In many cases the major part of maintenance costs results from non-corrective actions, and maintenance departments can spend more than 60 % of their time on reporting, reading documentation, doing routine checks and other indirect maintenance actions, leading to a lower return on assets.

There are ways that we can improve this. One example is the horizontal integration of field devices, for instance via FDT-DTM or EDDL, which enables the tunneling of information between field devices from independent suppliers and the DCS system. Once in the DCS system, field device status, up-to-date documentation and calibration information are only a mouse click away, eliminating time-consuming searches of the desk for the right information. The devices intelligently monitor their own health and status, together with possible critical errors, and automatically report to the DCS system or in some cases via email or SMS to the maintenance department.

This integrated approach enables Real-Time Plant Asset Management, integrating the real-time world of the field device via the DCS system with Asset Monitoring, which in turn integrates via Asset Condition Reporting with the Maintenance Management Systems. This kind of integration reduces the room for error resulting from human intervention.

Let’s take for instance the scenario where a simple valve fails. Thanks to the device integration, the failure is immediately reported to the DCS. The operator simply selects the defective valve on the screen and requests a work order for the maintenance department on the DCS system. The DCS has all the information belonging to this valve, combines this information and triggers a work order request on the maintenance management system, which in turn creates, stores and manages the actual work order for the maintenance department. Because of the high level of integration, the work order contains all the correct data as received from the DCS system. It is easy to see that this yields major savings, as there is no need to enter data manually and the room for error is drastically decreased.
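The data flow in this scenario can be sketched in a few lines. The record types, field names and values below are hypothetical and do not represent the interface of any real DCS or maintenance management system; the point is only that the work order request is assembled from data the DCS already holds, with nothing typed in by hand except the operator’s request.

```python
from dataclasses import dataclass


@dataclass
class DeviceRecord:
    """Hypothetical view of what the DCS knows about one field device."""
    tag: str
    description: str
    location: str
    status: str              # "OK" or a fault description from the device
    documentation_ref: str


@dataclass
class WorkOrderRequest:
    """Hypothetical request handed to the maintenance management system."""
    tag: str
    description: str
    location: str
    fault: str
    documentation_ref: str
    requested_by: str


def build_work_order_request(device, operator):
    """Assemble a work order request from the device data held in the DCS."""
    if device.status == "OK":
        raise ValueError(f"{device.tag} reports no fault")
    return WorkOrderRequest(
        tag=device.tag,
        description=device.description,
        location=device.location,
        fault=device.status,
        documentation_ref=device.documentation_ref,
        requested_by=operator,
    )


# Made-up example device.
valve = DeviceRecord(
    tag="FV-1203",
    description="Stock flow control valve",
    location="Approach flow, level 1",
    status="Positioner deviation too high",
    documentation_ref="DOC-FV-1203",
)
request = build_work_order_request(valve, operator="shift operator A")
print(request)
```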

These examples of how storing and using data can help us improve our efficiency and product quality are just four of many. Over the years we have effectively created the tools to improve our effectiveness, but we can take this a step further by bringing the data that all these individual systems collect and store together.

A modern automation system takes data integration to the next level and integrates advanced data management tools, like asset management, maintenance management, loop performance management and process management into the standard DCS functionality, providing decision making criteria that improve profitability and efficiency. If we want to improve, enhance and become more efficient, like for instance the way the combustion engine has developed, then we need to make use of the “Power of Integration”.

About the author

Born in the UK, Paul moved to the Netherlands early in life and completed his training with a BSc degree in Measurement and Control. Paul started his career with ABB in 1989 as a Quality Control Systems Service Engineer for the Pulp & Paper Industry, assisting customers with system and process issues. After 7 years in service and projects, Paul continued his career in sales, first in the Netherlands, then the Benelux and now in Central Europe.
